How To Reduce Cyclomatic Complexity In Your Code

Cyclomatic complexity is not just a coding metric. It reflects how many independent logical paths exist inside your functions, which directly affects how hard code is to test, maintain, and safely change. The higher the complexity, the more branching logic exists, which means more test cases, higher defect risk, slower PR reviews, and painful refactors down the road. Many engineering teams start flagging functions with a complexity above 10–15 because such functions tend to introduce instability and slow delivery.

Reducing cyclomatic complexity helps teams ship with confidence, improve readability, strengthen system reliability, and support scalable CI/CD practices instead of firefighting fragile code.

In this article, we will break down why reducing cyclomatic complexity improves stability and maintainability and walk you through proven techniques with examples you can start using today.

Key Takeaways

Cyclomatic complexity measures how many independent execution paths exist in your code; higher numbers mean harder testing, higher risk, and slower maintenance.

Common causes include deep nesting, large switch chains, boolean flags, duplicated code, and functions doing too many things.

Practical reduction strategies include breaking large functions into smaller ones, using early returns, replacing conditionals with design patterns, removing dead code, and eliminating flag arguments.

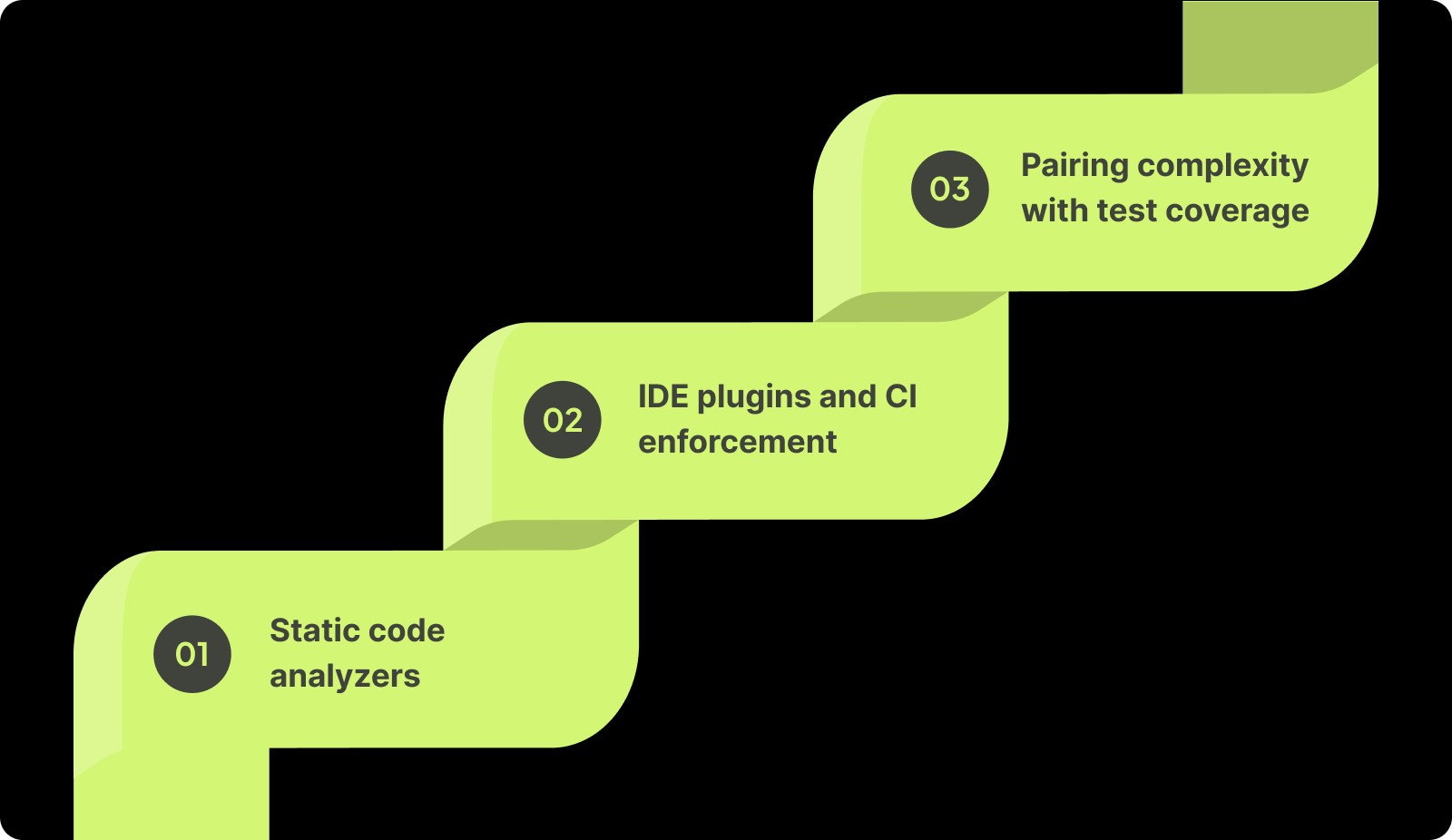

Sustainable improvement requires automation: static analyzers, IDE alerts, CI thresholds, and pairing complexity metrics with test coverage to prioritize real risk areas.

Entelligence AI helps teams stay ahead of complexity by surfacing complexity changes early, providing PR-level visibility, and offering context-driven insights to keep codebases clean and maintainable.

What Is Cyclomatic Complexity?

Cyclomatic complexity is a structural code metric that quantifies how many linearly independent execution paths exist in a program. In practical terms, it counts the decision points in your code, such as if, switch cases, for, while, catch, short-circuit logical operators, and other branching constructs, to estimate how complicated the control flow is. For a single function built from two-way decisions, the result works out to the number of decision points plus one.

The higher the number, the more branching logic your code contains, and the harder it becomes to understand, reason about, and test.
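To make the counting concrete, here is a small hypothetical Python function annotated with its decision points. It assumes the convention, used by many analyzers though not all, of counting each short-circuit `and`/`or` as an extra decision.

```python
def shipping_cost(weight_kg: float, express: bool, country: str) -> float:
    """Hypothetical pricing rule, annotated with its decision points."""
    cost = 5.0
    if weight_kg > 10:                  # decision point 1
        cost += 7.5
    if express and country == "US":     # decision points 2 (if) and 3 (and)
        cost *= 2
    elif country != "US":               # decision point 4
        cost += 15.0
    return cost

# 4 decision points + 1 = cyclomatic complexity of 5
```

Under that convention, basis path testing would aim for five test cases here, one per independent path.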

How it’s calculated

Cyclomatic complexity is derived from the control-flow graph of your program:

Nodes = basic blocks (straight-line runs of code with a single entry and a single exit)

Edges = possible control flow transitions

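The formula most teams reference is:

M = E − N + 2P

Where:

M = Cyclomatic complexity

E = Number of edges

N = Number of nodes

P = Number of connected components (1 for a single function or method, which reduces the formula to M = E − N + 2)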

Modern tools automatically compute this, but the concept matters: every conditional branch adds another execution path the team must account for.
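The formula is easy to check by hand on tiny graphs. The sketch below assumes a control-flow graph given as a list of directed edges between named blocks (the block names are illustrative), that every node appears in at least one edge, and takes the number of connected components P as a parameter defaulting to 1, the single-function case where the formula is M = E − N + 2.

```python
def cyclomatic_complexity(edges: list[tuple[str, str]], components: int = 1) -> int:
    """Compute M = E - N + 2P from an edge list.

    Assumes every node appears in at least one edge, so the node set
    can be derived from the edges themselves.
    """
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2 * components


# if/else: one decision, two routes from the condition to the exit
if_else = [("cond", "then"), ("cond", "else"), ("then", "exit"), ("else", "exit")]

# two sequential if statements without else branches
two_ifs = [
    ("if1", "body1"), ("if1", "if2"), ("body1", "if2"),
    ("if2", "body2"), ("if2", "exit"), ("body2", "exit"),
]

print(cyclomatic_complexity(if_else))  # 4 edges - 4 nodes + 2 = 2
print(cyclomatic_complexity(two_ifs))  # 6 edges - 5 nodes + 2 = 3
```

Both results match the shortcut of decision points plus one: one decision gives 2, two decisions give 3.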

Many engineering teams benchmark acceptable thresholds. Common guidance from static analysis platforms:

1–10 → simple, maintainable

11–20 → complex, needs closer review

>20 → high-risk, hard to maintain, likely needs refactoring

Why developers actually care

Cyclomatic complexity impacts day-to-day engineering reality.

Testing effort increases: Each independent path needs at least one test case to be covered, so higher complexity means more tests before you can ship with confidence.

Bug risk rises: More branches mean more edge cases, making failures more likely in production.

Maintenance slows down: Developers spend longer understanding intent, which hurts onboarding, refactoring, and delivery speed.

Security exposure grows: Complex branching creates blind spots and makes security reviews harder.

That’s why many engineering orgs define complexity budgets in CI pipelines, treat high-complexity modules as refactor candidates, and block merges when a function exceeds the agreed threshold.

The next step is understanding what “bad complexity” actually looks like in real code and how it creeps in faster than teams expect.


Common Code Patterns That Increase Cyclomatic Complexity

Cyclomatic complexity rarely explodes because of “bad developers.” It typically grows from everyday control structures that compound decision paths faster than teams realize. These patterns are the most frequent contributors.

Deeply nested control structures

When logic gets wrapped inside multiple layers of if, else if, else, for, and while, every layer adds at least one more decision point, and the combinations of conditions a reader has to track multiply with each level of depth. Deep nesting:

makes the execution order harder to reason about

forces test suites to account for multiple interdependent states

increases the likelihood of missed edge cases

Teams often see this in “god functions,” business rules coded inline, or defensive programming overload. Flattening nested conditionals is a common first step because deep nesting is where branching becomes hardest to read, review, and test.

Multi-way branching (large switch–case logic)

switch statements and long conditional chains (if–else if–else) can look organized, but each case represents another independent path the system must handle. The impact shows up quickly in real engineering workflows:

Unit tests expand significantly because each case requires scenario coverage

Future changes are risky because modifying one branch can unintentionally affect another

The codebase becomes rigid and teams hesitate to touch it

This is common in feature toggles, state machines, controller logic, and legacy systems that evolved without modularization. Many teams set policy thresholds because long switch blocks are one of the fastest ways to inflate complexity.

Boolean flags and conditional parameters

Boolean or “mode” parameters (processData(true)) silently double the logic inside a function. What looks like a single method is actually multiple behaviors hidden inside one block of code. This creates:

hidden branching paths that aren’t obvious when scanning code

higher testing overhead because both flag states must be validated

unclear intent: future maintainers must decode why a flag exists

Linters and code quality platforms repeatedly warn about this pattern because it embeds conditional logic where the separation of responsibility would be clearer.

Repeated, dead, or obsolete code

Duplicated logic and unused code don’t only clutter the repository. They inflate measured complexity and operational risk.

Repeated logic multiplies testing obligation across multiple locations

Unreferenced logic still increases structural path count in analysis tools

Future refactoring becomes dangerous because it's unclear what is safe to remove

Most high-performing teams aggressively remove or centralize duplicated logic because every duplicate effectively adds another branch surface that needs maintenance, review, and test investment.

Now that we have identified which coding patterns typically drive complexity up, the next step is to reduce cyclomatic complexity without breaking functionality, using techniques that engineering teams actually rely on in production.

Practical Techniques to Reduce Cyclomatic Complexity

Lowering cyclomatic complexity goes beyond writing “shorter code.” It’s about reshaping logic so behavior is clearer, paths are predictable, and each decision is intentional rather than incidental. These are the approaches high-performing engineering teams actually use in production systems.

1. Break large functions into smaller, purpose-driven units

Functions that try to “handle everything” grow in complexity rapidly because every responsibility brings its own decision paths. Splitting logic into smaller, single-purpose functions helps with the following:

Caps the number of branches inside any one block

Isolates behavior so each unit has fewer execution paths to test

Improves reuse and reduces the chances of duplicated branching later

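Top-performing teams typically aim for functions that do exactly one thing (the Single Responsibility Principle, or SRP), because once a function stops being conceptually simple, its complexity almost always follows. Keep in mind that splitting moves decision points rather than deleting them: the module's total stays roughly the same, but no single function has to carry all of them.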
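As a minimal sketch, assuming a hypothetical `Order` type and coupon table, here is an order handler before and after being split by responsibility. The original function holds five decision points; after the split, no function holds more than three, and each piece can be tested on its own.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical coupon table shared by both versions.
COUPONS = {"SAVE10": 0.9}


@dataclass
class Order:
    items: list[tuple[str, int, float]] = field(default_factory=list)  # (sku, quantity, unit price)
    coupon: Optional[str] = None


# Before: validation, pricing, and discounting tangled in one function.
def process_order_monolithic(order: Order) -> float:
    if not order.items:
        raise ValueError("empty order")
    total = 0.0
    for sku, qty, price in order.items:
        if qty <= 0:
            raise ValueError(f"bad quantity for {sku}")
        total += qty * price
    if order.coupon is not None:
        if order.coupon not in COUPONS:
            raise ValueError("unknown coupon")
        total *= COUPONS[order.coupon]
    return round(total, 2)


# After: each responsibility lives in its own small function.
def validate(order: Order) -> None:
    if not order.items:
        raise ValueError("empty order")
    for sku, qty, _ in order.items:
        if qty <= 0:
            raise ValueError(f"bad quantity for {sku}")


def subtotal(order: Order) -> float:
    return sum(qty * price for _, qty, price in order.items)


def apply_coupon(total: float, coupon: Optional[str]) -> float:
    if coupon is None:
        return total
    if coupon not in COUPONS:
        raise ValueError("unknown coupon")
    return total * COUPONS[coupon]


def process_order(order: Order) -> float:
    # The top-level function now reads as a sequence of named steps.
    validate(order)
    return round(apply_coupon(subtotal(order), order.coupon), 2)
```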

2. Use early returns to eliminate deep nesting

Complexity is hardest to follow when logic collapses inward and every condition nests inside another. Teams counter this with guard clauses and early exits, which:

flatten execution flow instead of drilling deeper into nested blocks

make failure and edge-case handling explicit at the top of the function

leave the “happy path” readable instead of burying it under layers of conditions

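Guard clauses do not always lower the raw McCabe number, since each check is still a decision point, but they cut nesting depth, which is what makes branching code hard to read and review. It is one of the most commonly recommended refactoring steps because it delivers that improvement without redesigning the architecture.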
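Here is a minimal sketch of the same publishing rule written both ways; the `user` and `post` dictionaries are hypothetical stand-ins for real domain objects. Both versions perform the same three checks, so a McCabe-style count is identical. What changes is that the guard-clause version never nests deeper than one level and keeps the happy path on the last line.

```python
from typing import Optional


# Before: three levels of nesting before the real answer appears.
def can_publish_nested(user: Optional[dict], post: dict) -> bool:
    if user is not None:
        if user.get("active"):
            if post.get("title"):
                return True
            else:
                return False
        else:
            return False
    else:
        return False


# After: the same three checks as guard clauses, one nesting level deep.
def can_publish(user: Optional[dict], post: dict) -> bool:
    if user is None:              # guard: no signed-in user
        return False
    if not user.get("active"):    # guard: deactivated account
        return False
    if not post.get("title"):     # guard: untitled draft
        return False
    return True                   # happy path
```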

3. Replace sprawling conditionals with polymorphism or strategy patterns

Long if/else or switch blocks are often a signal that multiple behaviors are being forced into a single decision hub. Object-oriented teams reduce complexity by moving behavior into the objects themselves, using polymorphism and patterns such as Strategy or State. This approach helps with the following:

Removes branch logic from core flow

Distributes behavior across dedicated components

Keeps future extension manageable without adding new branches

Instead of stacking more conditions, teams add new implementations and complexity stops compounding in one place.
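A minimal Strategy sketch in Python, replacing a hypothetical `if method == "standard": ... elif method == "express": ...` chain. The shipping methods and rates are made up for illustration. The dispatcher keeps a single decision (rejecting an unknown method) no matter how many strategies are registered.

```python
from typing import Protocol


class ShippingStrategy(Protocol):
    def cost(self, weight_kg: float) -> float: ...


# Each strategy owns exactly one pricing rule (rates are hypothetical).
class Standard:
    def cost(self, weight_kg: float) -> float:
        return 5.0 + 1.0 * weight_kg


class Express:
    def cost(self, weight_kg: float) -> float:
        return 15.0 + 2.5 * weight_kg


class Freight:
    def cost(self, weight_kg: float) -> float:
        return max(50.0, 0.8 * weight_kg)


# Adding a method means adding an entry here, not another elif branch.
STRATEGIES: dict[str, ShippingStrategy] = {
    "standard": Standard(),
    "express": Express(),
    "freight": Freight(),
}


def shipping_cost(method: str, weight_kg: float) -> float:
    strategy = STRATEGIES.get(method)
    if strategy is None:
        raise ValueError(f"unknown shipping method: {method}")
    return strategy.cost(weight_kg)
```

A dictionary of strategy objects is one idiomatic Python option; in languages without first-class functions the same idea is usually expressed with an interface and a factory.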

4. Remove dead, obsolete, and duplicated code

Static analysis platforms consistently rank duplication and unused paths as major contributors to inflated complexity scores. Cleaning them up has an immediate measurable impact because:

every duplicate block creates another branch surface to maintain and test

abandoned methods still inflate measured structural paths

redundancy magnifies risk during refactoring and deployments

Many engineering teams pair this with CI policies so duplicate paths never creep back in.
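Dead code is removed simply by deleting it, so this sketch focuses on duplication. It assumes a hypothetical, deliberately simple email rule that had been copy-pasted into two entry points; centralizing it means its branches exist, and need tests, in exactly one place.

```python
# Before: the same validation rule was pasted into each entry point,
# so every copy carried its own branches to maintain and test.
# After: the rule lives in one helper that both callers share.

def is_valid_email(email: str) -> bool:
    """Hypothetical rule: something before '@' and a dot in the domain."""
    local, sep, domain = email.partition("@")
    return bool(sep) and bool(local) and "." in domain


def register_user(users: dict[str, dict], email: str) -> None:
    if not is_valid_email(email):
        raise ValueError(f"invalid email: {email}")
    users[email] = {"email": email}


def change_email(users: dict[str, dict], old: str, new: str) -> None:
    if not is_valid_email(new):
        raise ValueError(f"invalid email: {new}")
    record = users.pop(old)
    record["email"] = new
    users[new] = record
```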

5. Reduce flag arguments and parameter stacks

Boolean flags and “mode” parameters are quiet complexity multipliers. They turn single functions into two or more behavioral branches hidden behind a simple parameter. Teams reduce complexity here by:

splitting multi-behavior functions into separate explicit functions

grouping related parameters into domain objects

ensuring method signatures describe behavior instead of hiding it

This prevents functions from secretly acting like multiple execution engines under the hood.
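A minimal sketch of removing a flag argument, assuming a hypothetical `export_report(rows, as_csv)` function that switched between CSV and JSON output. Each replacement function has one job, and the call site states which format it wants.

```python
import csv
import io
import json

# Before (sketched): one flag selecting between two unrelated behaviors.
#     def export_report(rows, as_csv):
#         if as_csv:
#             ...build CSV...
#         else:
#             ...build JSON...
#
# After: two explicit functions whose names say what they do.

def export_csv(rows: list[dict]) -> str:
    """Render rows as CSV; assumes every row has the same keys."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def export_json(rows: list[dict]) -> str:
    """Render rows as an indented JSON array."""
    return json.dumps(rows, indent=2)
```

Call sites change from `export_report(rows, True)` to `export_csv(rows)`, which reads correctly without looking up what `True` meant.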

Refactoring reduces complexity at the source, but sustainable improvement depends on being able to measure, monitor, and enforce lower complexity as systems evolve, which is where intelligent tooling becomes essential.


Tools and Automation for Monitoring Cyclomatic Complexity

Reducing cyclomatic complexity only works if you can see it happening. Teams that succeed don’t rely on intuition or code reviews alone. They track complexity automatically across repositories, incrementally enforce thresholds, and create visibility that keeps developers accountable without slowing delivery.

Static code analyzers

Static analysis tools remain the most reliable way engineering teams quantify cyclomatic complexity in production environments. Platforms such as SonarQube, Codacy, NDepend, Snyk Code, and similar enterprise analyzers calculate complexity per file, function, and module, then score them against quality thresholds.

These tools highlight specific blocks driving the score, flag “hotspots” that combine high complexity with frequent change, and help teams prioritize what actually matters instead of treating all complexity equally.
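To show roughly what such analyzers do under the hood, here is a simplified Python sketch built on the standard `ast` module. Its rule set is an assumption and deliberately small: one point per `if`/`elif`, loop, conditional expression, and `except` clause, plus one per extra operand in `and`/`or` chains. Real tools differ in the details; for Python projects you would normally use an existing analyzer such as radon or flake8's `--max-complexity` option instead.

```python
import ast

# Node types treated as one decision point each (a simplified rule set).
DECISION_NODES = (ast.If, ast.IfExp, ast.For, ast.AsyncFor, ast.While, ast.ExceptHandler)


def function_complexity(func: ast.AST) -> int:
    """Approximate McCabe complexity: 1 + decision points inside `func`.

    Simplification: nodes of nested functions are counted toward the
    enclosing function, and match statements are ignored.
    """
    complexity = 1
    for node in ast.walk(func):
        if isinstance(node, DECISION_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` short-circuits twice, so count operands - 1
            complexity += len(node.values) - 1
    return complexity


def complexity_report(source: str) -> dict[str, int]:
    """Map each function name in `source` to its approximate complexity."""
    tree = ast.parse(source)
    return {
        node.name: function_complexity(node)
        for node in ast.walk(tree)
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
    }


SAMPLE = '''
def simple(x):
    return x + 1

def branchy(x, y):
    if x > 0 and y > 0:
        return 1
    elif x < 0:
        return -1
    for i in range(3):
        if i == y:
            return i
    return 0
'''

print(complexity_report(SAMPLE))  # {'simple': 1, 'branchy': 6}
```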

IDE plugins and CI enforcement

High-performing teams shift complexity monitoring left. Instead of letting complex code into the repo and fixing it later, they enforce standards at development time. Many IDEs (VS Code, IntelliJ, Rider, Visual Studio) can surface complexity warnings inline as developers write code, usually through plugins or built-in code metrics features.

Meanwhile, CI pipelines integrate complexity checks so pull requests fail when they introduce functions above an agreed threshold, often 10 or 15 for critical functions. This turns complexity management into a continuous discipline rather than an occasional clean-up exercise.
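A CI gate can be very small. This sketch assumes a hypothetical report format, a mapping from function name to complexity score produced by whatever analyzer the pipeline runs, and turns it into an exit code the CI system understands.

```python
import sys


def find_violations(report: dict[str, int], threshold: int = 10) -> list[str]:
    """List every function whose complexity is above the threshold."""
    return [
        f"{name}: complexity {score} exceeds {threshold}"
        for name, score in sorted(report.items())
        if score > threshold
    ]


def gate(report: dict[str, int], threshold: int = 10) -> int:
    """Print violations to stderr and return a CI-friendly exit code."""
    violations = find_violations(report, threshold)
    for message in violations:
        print(message, file=sys.stderr)
    return 1 if violations else 0
```

A pipeline step would end with `sys.exit(gate(report, threshold=15))`, so any violation fails the build. For Python code specifically, flake8's `--max-complexity` option already provides an equivalent check.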

Pairing complexity with test coverage

Cyclomatic complexity is most meaningful when viewed alongside test coverage. High-complexity code with weak testing is where failures, regressions, and production incidents typically originate. Teams combine these signals to:

identify high-risk functions requiring more unit tests

prioritize refactors where complexity and churn are both high

justify engineering investment with data rather than opinion

Many engineering platforms now show these metrics side by side so leaders can see not just how complex code is but how fragile it is.
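One published way to combine the two signals is the CRAP (Change Risk Anti-Patterns) score: complexity^2 × (1 − coverage)^3 + complexity, with coverage expressed as a fraction. The sketch below ranks functions by it; the function names and numbers are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FunctionStats:
    name: str
    complexity: int
    coverage: float  # fraction of the function exercised by tests, 0.0 to 1.0


def crap_score(stats: FunctionStats) -> float:
    """CRAP = complexity^2 * (1 - coverage)^3 + complexity."""
    return stats.complexity ** 2 * (1 - stats.coverage) ** 3 + stats.complexity


def rank_by_risk(functions: list[FunctionStats]) -> list[FunctionStats]:
    """Highest-risk functions first: complex and poorly tested."""
    return sorted(functions, key=crap_score, reverse=True)


functions = [
    FunctionStats("format_invoice", complexity=3, coverage=0.0),
    FunctionStats("checkout", complexity=18, coverage=0.25),
    FunctionStats("parse_config", complexity=12, coverage=0.9),
]
for f in rank_by_risk(functions):
    print(f"{f.name}: {crap_score(f):.1f}")
```

The well-tested `parse_config` scores close to its bare complexity, while the poorly tested `checkout` lands far ahead of both others, which is exactly the refactor-first candidate this section describes.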

Best Practices for Keeping Complexity Low

Beyond specific techniques, adopting healthy coding practices prevents unnecessary complexity from building up in the first place.

Make code reviews explicitly evaluate complexity, not just correctness

Top-performing teams review logic structure, not only “does it work.” Reviewers actively question deep nesting, long methods, and sprawling conditionals rather than approving code that simply passes tests.

Define complexity thresholds and enforce them in CI

Many organizations set numerical thresholds (commonly 10 or less per function, the limit Thomas McCabe proposed in his original 1976 paper and a figure many tools still recommend) and fail builds or flag PRs when complexity exceeds agreed limits. This prevents complexity creep instead of reacting to it later.

Refactor with tests in place

High-complexity code often hides risk. Teams that successfully reduce it pair refactoring with unit tests and regression checks so behavior stays stable while structure improves, reinforcing reliability while simplifying logic.
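A characterization test pins down existing behavior before the structure changes. This sketch uses a hypothetical discount rule and compares the legacy and refactored versions across every combination of a small input grid, including the boundary around 100 where an off-by-one would hide. The test function is named so pytest would collect it, though it also runs as a plain call.

```python
from itertools import product


# Legacy version with nested branches.
def discount_legacy(is_member: bool, total: float, coupon: bool) -> float:
    if is_member:
        if total > 100:
            return 0.15
        else:
            if coupon:
                return 0.10
            else:
                return 0.05
    else:
        if coupon:
            return 0.10
        else:
            return 0.0


# Refactored version: flatter structure, same intended behavior.
def discount_refactored(is_member: bool, total: float, coupon: bool) -> float:
    if is_member and total > 100:
        return 0.15
    if coupon:
        return 0.10
    return 0.05 if is_member else 0.0


def test_discount_refactor_preserves_behavior() -> None:
    # Every combination of membership, boundary totals, and coupon use.
    for is_member, total, coupon in product([True, False], [0, 100, 101, 500], [True, False]):
        expected = discount_legacy(is_member, total, coupon)
        actual = discount_refactored(is_member, total, coupon)
        assert actual == expected, (is_member, total, coupon)
```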

When disciplined coding habits combine with automated enforcement and real-time insight, complexity stops accumulating quietly in the background, and your codebase stays maintainable over time.

How Entelligence AI Helps Reduce Cyclomatic Complexity

Reducing cyclomatic complexity requires visibility, continuous feedback, and clear signals during development. Entelligence AI helps engineering teams stay ahead of complexity by detecting risk earlier and keeping reviewers informed.

Here’s how Entelligence AI supports this:

Real-time complexity awareness: Highlights complexity changes as code evolves so teams can address issues before they become long-term maintenance problems.

Context-driven guidance: Surfaces insights that help identify where functions can be simplified, logic flattened, or responsibilities separated.

PR-level visibility: Provides complexity indicators inside pull requests so reviewers instantly understand risk without hunting through code.

Try Entelligence AI in your workflow to catch complexity growth early and maintain cleaner, more predictable codebases.

Conclusion

Keeping cyclomatic complexity low helps teams write code that’s easier to understand, test, and maintain. By applying proven techniques like breaking long methods into small ones, eliminating unnecessary decision paths, and leveraging design patterns, developers reduce risk and accelerate delivery. Tooling and continuous measurement are essential to sustaining improvements.

Platforms like Entelligence AI go even further by automating complexity insights, integrating suggestions directly into developer workflows, and helping engineering teams act before problems surface.

Schedule a demo to see how Entelligence AI fits into your code review and refactoring workflows.

Frequently Asked Questions (FAQs)

1. What is cyclomatic complexity in code?

Cyclomatic complexity measures the number of linearly independent paths through a function's control flow. It is not the total number of possible execution paths, which can grow far faster as decisions are combined.

2. Why should I reduce cyclomatic complexity?

Lower complexity helps improve readability and maintainability and reduces the number of test cases needed for reliable coverage.

3. Is cyclomatic complexity always bad?

Not always. Sometimes a complex algorithm is inherently complex, but in most cases, high values signal code that may benefit from simplification.

4. How does refactoring reduce complexity?

Refactoring breaks large blocks with many decisions into smaller, purpose-specific functions, reducing the independent paths inside each function and improving clarity.

5. Can tools measure cyclomatic complexity automatically?

Yes, static analyzers like SonarQube, Codacy, and NDepend compute complexity automatically and can integrate into CI/CD workflows.