Gemini 3 Flash vs GPT-5.2: Cost, Speed & Workflow Fit (2026)

Nesar

Jan 9, 2026

Workflow efficiency

Nesar

Jan 9, 2026

Workflow efficiency

Software development is moving fast, and teams need tools that keep up. AI is becoming a core part of coding, debugging, and collaboration. In fact, 82% of developers report using ChatGPT or GPT models, with 29% using Bard/Gemini, showing just how widespread AI has become in engineering workflows.

But not all AI works the same way. Some models are built for speed and instant suggestions, while others focus on careful reasoning and complex problem-solving.

In this blog, we will explore Gemini 3 Flash and GPT-5.2, comparing performance, costs, and workflow fit to help teams pick the right AI in 2026.

Overview

Gemini 3 Flash is positioned as a lower-cost, faster-iteration option for high-volume engineering workflows where response speed and throughput matter.

GPT-5.2 is positioned as a higher-cost model where deeper reasoning and higher-accuracy outputs can justify slower iteration.

Listed API pricing (check vendor pages for updates): Gemini 3 Flash shows $0.50 per 1M input tokens and $3.00 per 1M output tokens.

GPT-5.2 shows $1.75 per 1M input tokens and $14.00 per 1M output tokens.

The best choice depends on what your team optimizes for: fast iteration and cost control, or deeper reasoning for higher-stakes engineering tasks.

Understanding Gemini 3 Flash and GPT-5.2

Gemini 3 Flash and GPT-5.2 are both advanced AI models, but they are built for distinct engineering priorities. Knowing their core strengths helps you choose the right model for coding, debugging, and workflow efficiency in 2026.

What Gemini 3 Flash Is Built For?

Gemini 3 Flash is engineered for speed and large-scale reasoning. It excels in handling multi-repo codebases, long dependency chains, and real-time PR analysis. Its architecture prioritizes low-latency execution, making it ideal for rapid iteration in IDEs.

You can feed it diagrams, logs, or traces alongside code, and it will interpret them contextually, enabling developers like you to catch integration issues before merging.

What GPT-5.2 Is Designed to Do?

GPT-5.2 is optimized for depth and precision in reasoning-heavy tasks. It shines in algorithmic problem solving, complex refactoring, and debugging intricate code flows.

While its throughput is slightly lower than Gemini 3 Flash, it maintains high logical accuracy, making it suitable for critical code review, architectural suggestions, and long-form documentation generation.

Now that we understand the core design and strengths of Gemini 3 Flash and GPT-5.2, let’s explore how they perform in real-time coding speed and latency.

Also Read: Building Engineering Efficiency: 9 Proven Strategies for High-Performing Teams

Speed and Latency: Which Model Feels Faster in Practice

When your team is shipping code daily, speed directly affects developer efficiency. Gemini 3 Flash and GPT-5.2 differ significantly in how they handle real-time workflows and feedback loops.

Response Time in Real Development Workflows

Gemini 3 Flash typically fits workflows where teams want fast back-and-forth: quick PR suggestions, iterative code edits, and rapid interpretation of logs or configs.

GPT-5.2 typically fits workflows where teams accept slower responses in exchange for more deliberate, reasoning-heavy outputs, like complex debugging, architecture review support, or careful refactoring plans.

Impact on Developer Velocity and Feedback Loops

Gemini 3 Flash: Boosts PR resolution, detects conflicts in real time, and reduces context switching between coding and review tools, boosting overall engineering throughput for fast-moving teams.

GPT-5.2: With GPT-5.2, you get detailed, highly accurate feedback that catches subtle issues, but slower response times can extend review cycles. It’s ideal when your priority is accuracy and code quality over sheer speed.

Having seen how fast each model responds in practical workflows, the next step is examining how they think, reason, and solve complex engineering problems.

Reasoning and Intelligence: How Smart Are They Really

When it comes to solving complex engineering problems, how a model thinks can determine your code quality, review speed, and debugging efficiency. Gemini 3 Flash and GPT-5.2 take different approaches to reasoning, impacting both everyday coding tasks and multi-step problem solving.

Logical Reasoning and Problem Solving

Gemini 3 Flash: Excels at real-time logical evaluation, spotting inconsistencies, detecting anti-patterns, and suggesting fixes across multiple modules simultaneously. Its reasoning is tuned to practical engineering scenarios, like dependency conflicts, merge errors, and optimization opportunities, giving developers actionable insights instantly.

GPT-5.2: You’ll get careful, step-by-step reasoning that’s ideal for algorithmic challenges, refactoring, and critical bug analysis. While it may take a bit longer to process, it surfaces deep logic errors and architectural issues you might miss in everyday workflows.

Handling Complex, Multi-Step Tasks

Gemini 3 Flash: Manages multi-stage code flows, from function implementation to integration, maintaining context across modules and delivering real-time suggestions for each step.

GPT-5.2: Handles intricate, multi-step tasks by breaking them down logically, giving you clear guidance on each step. This is perfect when tackling critical refactors or end-to-end algorithm design, where precision matters more than speed.

Once we grasp their reasoning abilities, it’s time to evaluate how Gemini 3 Flash and GPT-5.2 translate that intelligence into code generation, refactoring, and debugging.

Also Read: 15 Leadership Strategies for Scaling Engineering Teams That Actually Work

Coding and Developer Performance

How a model writes, refactors, and debugs code directly affects developer efficiency and software quality. Gemini 3 Flash and GPT-5.2 differ in output speed, accuracy, and integration with complex codebases.

Feature | Gemini 3 Flash | GPT-5.2 |

Code Generation | Fast, context-aware generation for multi-file projects. Suggestions align with repository standards and dependencies automatically. | Deep, logic-driven generation suitable for complex algorithms and intricate system designs. Slightly slower but ensures precise output. |

Refactoring Quality | Inline refactoring recommendations with real-time detection of anti-patterns and optimization opportunities. | Step-by-step guidance for critical refactors, highlighting architectural improvements and logic corrections. |

Debugging & Testing | Surfaces runtime issues, integration errors, and missing tests instantly, helping teams catch and reduce bugs before code merges. | Detects subtle logic errors, edge cases, and test coverage gaps. Supports creating detailed test cases for high-assurance codebases. |

Understanding output quality is important, but how these models maintain context across large codebases and lengthy sessions can make or break workflow efficiency.

Context Window and Long-Form Understanding

When engineering teams work with large repositories or lengthy coding sessions, how an AI model remembers context and maintains coherence can affect your productivity. Gemini 3 Flash and GPT-5.2 handle long-form workflows differently.

Gemini 3 Flash

Gemini 3 Flash maintains context across multi-module repositories and long PR chains, remembering dependencies, variable states, and prior comments in real time. It keeps coherence in extended coding sessions, reducing repeated explanations and enabling seamless multi-file edits.

GPT-5.2

GPT-5.2 handles long-form code and documentation well, but its session memory can truncate over very large repositories, requiring occasional context refreshes. It excels at stepwise reasoning across files, though maintaining multi-session coherence is slower compared to Gemini 3 Flash.

Beyond text, modern development requires interpreting diagrams, logs, and structured data. Let’s see how Gemini 3 Flash and GPT-5.2 handle multimodal inputs.

Multimodal Capabilities: Beyond Text

Interpreting diagrams, architecture visuals, logs, and structured data directly impacts your efficiency and code quality in engineering. Gemini 3 Flash and GPT-5.2 handle these non-text inputs in different ways.

Gemini 3 Flash

Gemini 3 Flash can interpret diagrams, UMLs, architecture schematics, and flowcharts directly in your workflow, while also parsing logs, JSON outputs, and other structured data. You get real-time, actionable insights without switching tools, keeping your engineering flow uninterrupted.

GPT-5.2

With GPT-5.2, you can analyze diagrams and visual inputs, but you’ll often need to structure data or provide extra context for accurate interpretation. Handling logs and non-text inputs works, yet maintaining precision across complex formats can require careful prompting on your side.

Having explored practical capabilities, we now quantify their performance through benchmarks. This way, you can understand measurable strengths and limitations for real engineering tasks.

Benchmarks and Measured Performance

Benchmarks provide quantifiable insight into how each model performs across coding, reasoning, and software-related tasks. The trick is using the right benchmarks for your workflow (agentic coding vs PR review vs log triage vs long-context refactors), and not over-trusting any single score.

Headline Benchmark Table (Gemini 3 Flash vs GPT-5.2):

What You Care About | Benchmark | Gemini 3 Flash (Thinking) | GPT-5.2 (High Reasoning) | How To Interpret This For Engineering |

|---|---|---|---|---|

Real repo bug-fixing (agentic coding) | SWE-bench Verified (single attempt) | 78.0% | 80.0% | Both are extremely strong for “take an issue + repo → produce a patch.” GPT edges slightly. |

Terminal-style debugging & CLI workflows | Terminal-Bench 2.0 | 47.6% | — | Gemini has a published score here; GPT-5.2 wasn’t listed in the same table. |

Long-horizon “do a bunch of tool steps” tasks | Toolathlon | 49.4% | 46.3% | Gemini leads; useful signal for multi-step agent workflows (tool calls, structured subtasks). |

Multi-step workflows via MCP | MCP Atlas | 57.4% | 60.6% | GPT leads; relevant if your workflow is MCP-heavy (connectors, multi-tool orchestration). |

Competitive programming / algorithmic coding | LiveCodeBench Pro (Elo) | 2316 | 2393 | GPT leads; better signal for algorithmic reasoning + tricky coding under constraints. |

Long-context “needle in haystack” reliability | MRCR v2 (128k avg, 8-needle) | 67.2% | 81.9% | GPT has a big edge; matters for very large repos, long incident docs, or multi-file refactors. |

UI/screen understanding | ScreenSpot-Pro | 69.1% | 86.3% (with Python) | GPT’s score shown here uses a tool; still a strong advantage for UI-driven debugging and “read the screen” tasks. |

Log/charts/figures reasoning | CharXiv Reasoning (no tools) | 80.3% | 82.1% | Very close; both solid for technical charts, traces, and figure-style reasoning. |

“Hard novelty” reasoning | ARC-AGI-2 | 33.6% | 52.9% | GPT leads; this is more abstract than day-to-day engineering, but correlates with tricky reasoning. |

Science-grade QA | GPQA Diamond (no tools) | 90.4% | 92.4% | Both are frontier-level; GPT slightly higher. |

Pricing (API list price) | Input / Output per 1M tokens | $0.50 / $3.00 | $1.75 / $14.00 | Gemini is dramatically cheaper on list price; this matters if you’re doing high-volume CI, support, or agent loops. |

Sources for the benchmark numbers and the per-token prices in this table come from Google DeepMind’s published Gemini 3 Flash evaluation table (which includes GPT-5.2 comparison columns).

GPT-5.2’s SWE-Bench Verified (80.0%) and SWE-Bench Pro (55.6%) are also reported by OpenAI.

Key Benchmark Results Explained

Gemini 3 Flash’s strongest “engineering” signals

Agentic workflow strength: Toolathlon is higher (49.4% vs 46.3%), indicating stronger long-horizon execution when an agent must plan and repeatedly call tools.

Cost for scale: $0.50/$3.00 per 1M input/output tokens makes it easier to run lots of evals, CI helpers, or always-on assistants without flinching at the bill.

SWE-bench Verified is already elite: 78% is “top-tier coding agent” territory.

GPT-5.2’s strongest “engineering” signals

Long-context reliability advantage: MRCR v2 shows a large lead (81.9% vs 67.2%), which matters when you stuff huge repos, long postmortems, or multi-incident context into a single run.

Algorithmic/competition coding edge: LiveCodeBench Pro Elo is higher (2393 vs 2316), matching the “structured algorithm + correctness” strength you’d feel in thorny logic and refactor correctness.

Top SWE-bench Verified score: 80% vs 78% is a small but real edge on the most common public repo-fix benchmark.

SWE-Bench Pro (multi-language, more industrial): OpenAI reports 55.6% on SWE-Bench Pro, which is useful if your stack isn’t Python-centric. (Google doesn’t publish a Gemini 3 Flash SWE-Bench Pro number in the sources above.)

What Benchmarks Do And Don’t Tell You

Benchmarks are useful, but they don’t match your reality: your repo structure, your build system, your code review culture, your incident patterns, and your internal tooling.

Use benchmarks as a filter, not a verdict. The final call should come from a short internal eval on your workflows:

PR review quality: Does it catch real bugs, suggest better abstractions, and avoid nitpicks?

Debugging accuracy: Can it resolve a few real past incidents using your logs/runbooks without inventing facts?

Refactor correctness: Can it change real modules safely (tests pass, interfaces consistent, no silent behavior change)?

Tool discipline: When it’s unsure, does it ask for the missing file/log, or does it guess?

Cost and Scalability of these Platforms

When running AI models for large engineering workflows, understanding per-token costs, output efficiency, and scalability is key to predicting your operational budget. This also helps in avoiding unexpected overspend. Let's learn the cost through the following table:

Pricing Metric | Gemini 3 Flash | GPT‑5.2 |

Input Tokens (per 1M) | $0.50 | $1.75 |

Output Tokens (per 1M) | $3.00 | $14.00 |

Context Caching | $0.05 / Cached | $0.175 / Cached |

Batch / Async Tiers | ~50% lower with batch options | Cached input discounts available |

For budgeting, estimate monthly tokens across your top workflows (IDE assist, PR reviews, incident RCA, docs). Then model the cost using:

Monthly input tokens × input rate

Monthly output tokens × output rate

Note that pricing may change; please verify against the vendor pages.

Tooling, Integrations, And Workflow Fit

In practice, workflow fit is determined less by Gemini 3 Flash vs GPT-5.2 as “models” and more by how you deploy either one: IDE extensions, PR bots, CI checks, incident workflows, and how well the setup connects to your repos and ticketing.

So instead of assuming “Gemini 3 Flash plugs in” or “GPT-5.2 needs manual setup,” evaluate the integration path you’ll actually use for whichever model you pick.

The integration layers that matter for both Gemini 3 Flash and GPT-5.2:

IDE layer: in-editor assistance (code suggestions, refactors, explanations)

PR layer: review summaries, risk flags, lint/test guidance, policy checks

CI/CD layer: build/test gates, deploy verification, rollback triggers

Incident layer: log/trace correlation, runbooks, postmortem capture

Work management layer: Jira/Linear linking, ownership routing, Slack notifications

If You Want Fast Iteration (Low Context Switching)

For teams using Gemini 3 Flash or GPT-5.2 primarily for high-frequency work (PR flow + IDE help), prioritise a setup that reduces context switching:

IDE + PR bot combo so developers don’t jump between tools

CI posts actionable summaries back into the PR (not raw logs)

A standard “incident packet” template (recent deploys + key logs + top metrics) so prompts stay short

This is the best path if your goal is shorter feedback loops and faster PR cycles, regardless of the model you choose.

If You Want High-Assurance Reasoning (Max Context Preservation)

For teams using Gemini 3 Flash or GPT-5.2 for deeper debugging, architecture reviews, and critical refactors, prioritise workflows that preserve full context and remain repeatable:

Provide repo context systematically (relevant files, configs, dependencies)

Use structured prompts (same sections every time: constraints, assumptions, risks, test plan)

Create repeatable evaluation prompts so you can compare outputs over time

Keep humans in the loop for high-impact decisions (merge approvals, prod rollbacks)

This is the best path when your priority is accuracy, traceability, and consistency.

Bottom line: comparing Gemini 3 Flash vs GPT-5.2 on “workflow fit” should focus on the deployment setup (IDE + PR + CI + incident flow) more than the raw model label.

Now that workflow integration is clear, enterprise considerations like security, stability, and operational maturity dictate how these models perform at scale.

Also Read: 10 Proven Best Practices for Managing Technical Debt in Distributed Teams

Enterprise Readiness and Production Use

Choosing an AI model for your engineering org needs to have security, stability, and operational reliability when integrating into production systems at scale. Here’s how both model fares:

Gemini 3 Flash

Gemini 3 Flash comes with enterprise-grade security controls, including role-based access, encrypted data handling, and audit logs for code and documentation. Its architecture supports high-availability deployment, automated failover, and predictable scaling, ensuring uninterrupted code reviews, CI/CD checks, and multi-team collaboration.

GPT‑5.2

GPT‑5.2 offers strong encryption and compliance features, but enterprise deployment often requires additional configuration for secure API usage and integration with internal systems. While reliable, scaling across large orgs or sensitive codebases may demand extra infrastructure and monitoring, especially for mission-critical workflows.

Once we’ve assessed security, scalability, and workflow fit, we can compare scenarios to decide which AI model aligns best with your team’s priorities.

Which Model Is Right for Your Team?

Picking the right AI model means matching features, speed, and workflow fit to your engineering team’s specific needs, scale, and goals.

When Gemini 3 Flash Makes More Sense

Your team works with large codebases and needs fast, context-aware code reviews.

You require seamless IDE and CI/CD integration with minimal setup.

Security and enterprise-grade governance are essential.

Cost predictability matters for high-volume token processing, especially in code analysis, debugging, or automated documentation.

When GPT‑5.2 Is the Better Choice

Your focus is on complex algorithmic reasoning or structured problem-solving beyond everyday code generation.

You can invest time in custom integrations and agent workflows.

Occasional higher costs are acceptable for advanced output quality or specialized tasks.

Teams value flexibility in prompt-driven outputs over turnkey IDE integration.

Having matched models to team needs, the challenge remains: how to unify outputs into actionable insights. That’s where Entelligence AI simplifies engineering intelligence across workflows.

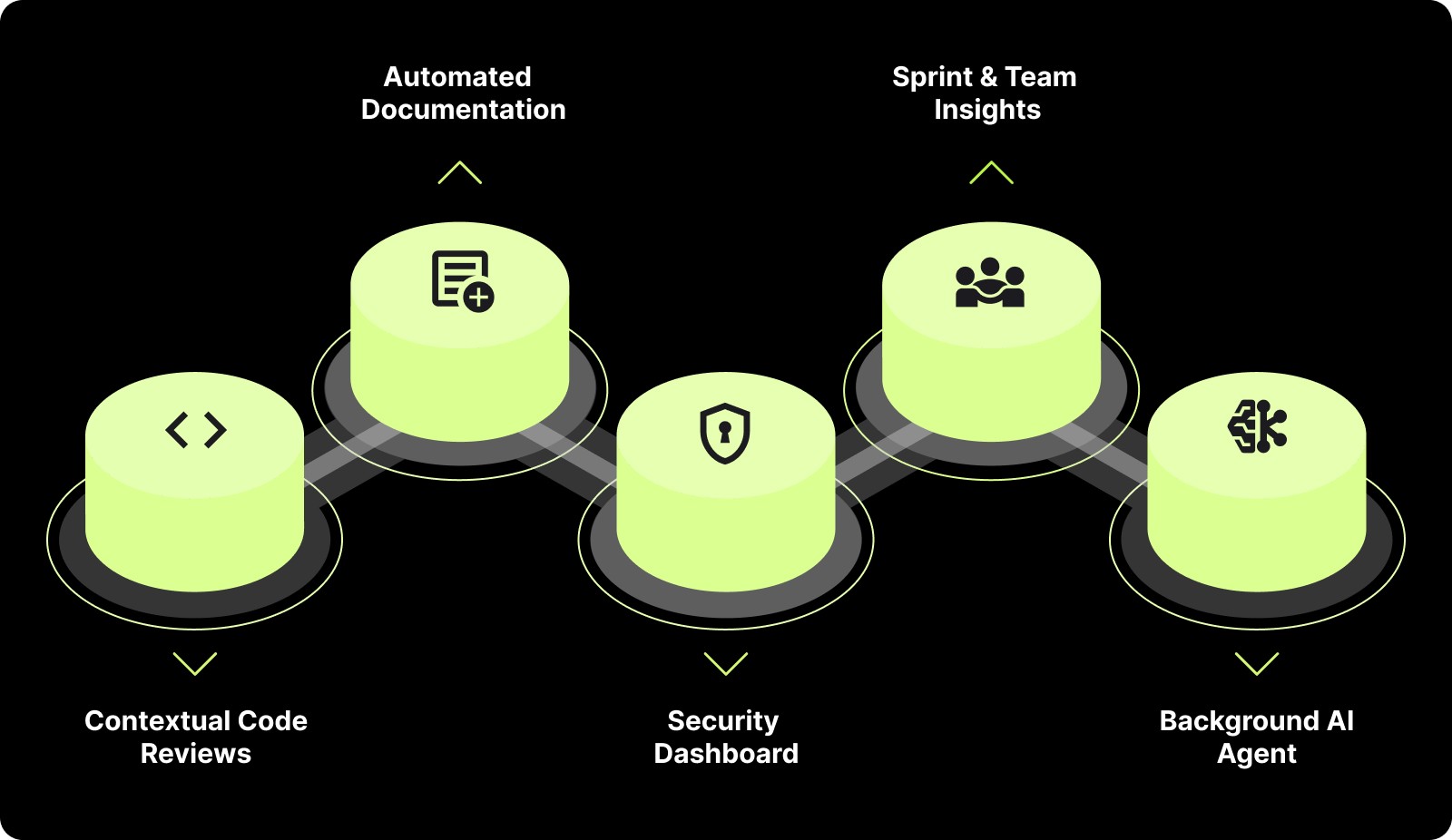

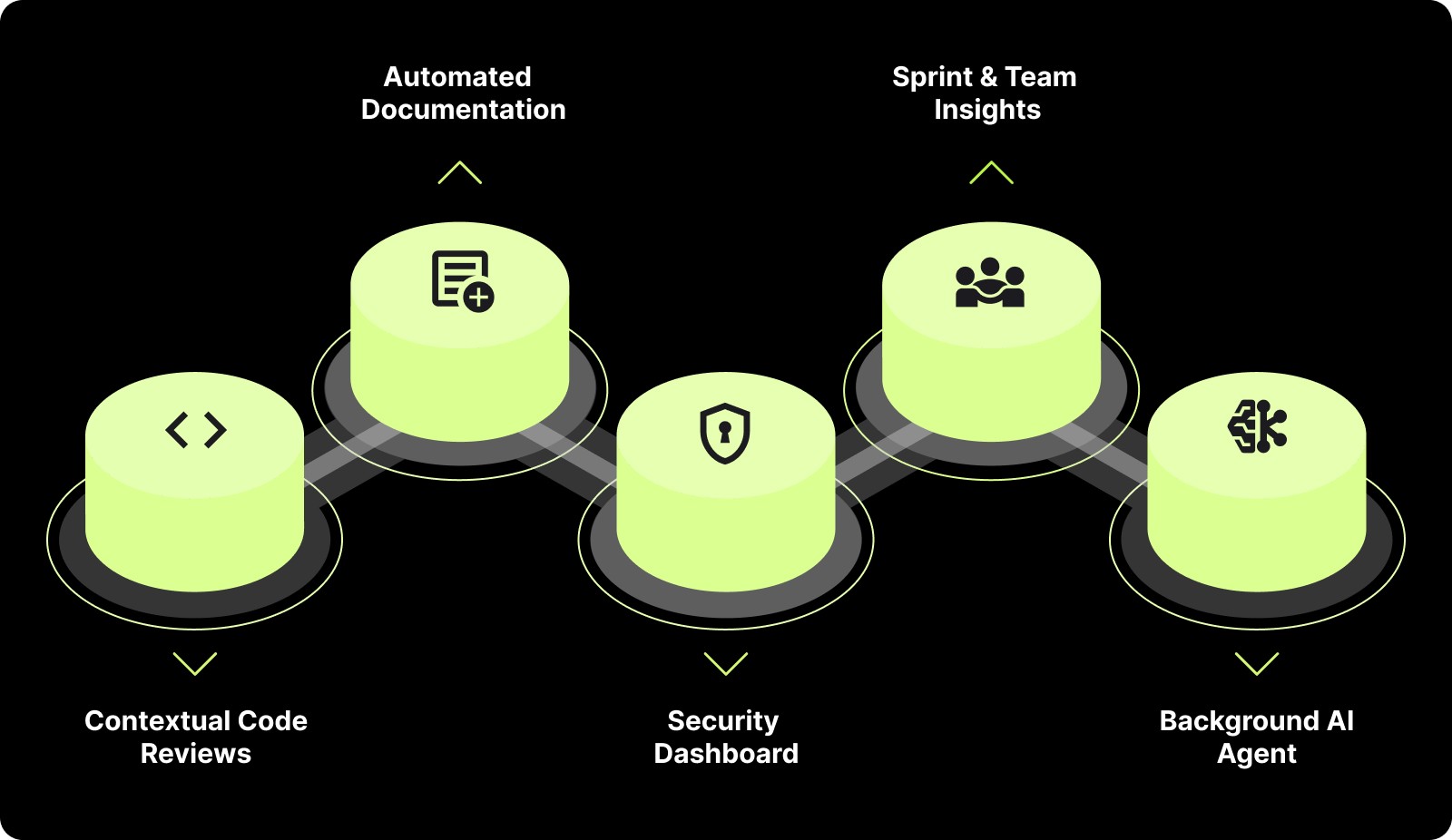

Where Entelligence AI Fits in the Stack?

When comparing Gemini 3 Flash and GPT-5.2, the challenge is turning AI outputs into actionable code, documentation, and team insights. Engineers often juggle multiple tools, slowing reviews, debugging, and sprint tracking.

Entelligence AI unifies this process by layering AI intelligence into your engineering workflow, giving you clarity, speed, and operational control across the org without switching between tools.

Key Features of Entelligence AI:

Contextual Code Reviews: Highlights critical issues, test gaps, and anti-patterns directly in your IDE, improving the actionable value of both Gemini 3 Flash and GPT-5.2 outputs.

Automated Documentation: Generates real-time, multi-repo architecture diagrams, API docs, and code explanations that stay in sync with ongoing changes.

Security Dashboard: Continuously scans for vulnerabilities, misconfigurations, and dependency risks, keeping AI-generated code production-safe.

Sprint & Team Insights: Tracks task completion, blockers, and developer contributions, turning model outputs into measurable team performance data.

Background AI Agent: Automates repetitive tasks like linting, refactoring, and PR prep, freeing developers to focus on high-value coding work.

By integrating Entelligence AI with your chosen AI model, you get both cutting-edge AI reasoning and real-world workflow efficiency. This makes Gemini 3 Flash or GPT-5.2 outputs actionable and production-ready.

Final Verdict: Choosing the Right Intelligence Layer

In 2026, both Gemini 3 Flash and GPT‑5.2 offer impressive reasoning, coding, and multimodal capabilities. Gemini 3 Flash excels in context retention, multi-step reasoning, and workflow integration, while GPT‑5.2 shines in structured problem-solving and algorithmic complexity. Your choice ultimately depends on team scale, project type, and operational priorities.

Entelligence AI complements either model by turning AI outputs into actionable engineering intelligence. It unifies code quality, automated documentation, security, and team performance insights. This way, you can move faster, reduce errors, and maintain full visibility across projects without juggling multiple tools.

Ready to see how Entelligence AI can improve your AI workflows and unify Gemini 3 Flash or GPT‑5.2 outputs into actionable engineering insights? Book a demo today to experience the full platform in action.

FAQs

1. Which one is better, Gemini or GPT?

There’s no universal winner; it depends on what your team prioritizes. Gemini 3 Flash is better suited for engineering teams that need speed, large-context handling, and tight integration with codebases and workflows. GPT-5.2 performs better when tasks demand deep reasoning, structured logic, or complex problem decomposition. The “better” choice depends on whether your priority is execution velocity or analytical depth.

2. Which model is better for large engineering teams?

For large teams working across multiple repositories and CI/CD pipelines, Gemini 3 Flash tends to scale more smoothly. Its ability to maintain context across large codebases and support real-time collaboration makes it more practical for high-volume engineering environments.

3. Which model is more cost-efficient for long-term use?

Gemini 3 Flash generally offers more predictable and lower operational costs at scale, especially for teams processing large volumes of code, logs, or documentation. GPT-5.2 can become more expensive as usage grows due to higher per-token costs and deeper reasoning overhead.

4. Which model works better for complex system design and architecture reviews?

GPT-5.2 is often better suited for deep architectural reasoning, system design discussions, and complex algorithmic evaluation. It excels when the task requires structured thinking and detailed explanation rather than rapid iteration.

5. Can both models be used together?

Yes. Many teams use Gemini 3 Flash for fast, continuous engineering workflows and GPT-5.2 for specialized reasoning or design validation. This hybrid approach balances speed, cost efficiency, and depth of analysis.

Software development is moving fast, and teams need tools that keep up. AI is becoming a core part of coding, debugging, and collaboration. In fact, 82% of developers report using ChatGPT or GPT models, with 29% using Bard/Gemini, showing just how widespread AI has become in engineering workflows.

But not all AI works the same way. Some models are built for speed and instant suggestions, while others focus on careful reasoning and complex problem-solving.

In this blog, we will explore Gemini 3 Flash and GPT-5.2, comparing performance, costs, and workflow fit to help teams pick the right AI in 2026.

Overview

Gemini 3 Flash is positioned as a lower-cost, faster-iteration option for high-volume engineering workflows where response speed and throughput matter.

GPT-5.2 is positioned as a higher-cost model where deeper reasoning and higher-accuracy outputs can justify slower iteration.

Listed API pricing (check vendor pages for updates): Gemini 3 Flash shows $0.50 per 1M input tokens and $3.00 per 1M output tokens.

GPT-5.2 shows $1.75 per 1M input tokens and $14.00 per 1M output tokens.

The best choice depends on what your team optimizes for: fast iteration and cost control, or deeper reasoning for higher-stakes engineering tasks.

Understanding Gemini 3 Flash and GPT-5.2

Gemini 3 Flash and GPT-5.2 are both advanced AI models, but they are built for distinct engineering priorities. Knowing their core strengths helps you choose the right model for coding, debugging, and workflow efficiency in 2026.

What Gemini 3 Flash Is Built For?

Gemini 3 Flash is engineered for speed and large-scale reasoning. It excels in handling multi-repo codebases, long dependency chains, and real-time PR analysis. Its architecture prioritizes low-latency execution, making it ideal for rapid iteration in IDEs.

You can feed it diagrams, logs, or traces alongside code, and it will interpret them contextually, enabling developers like you to catch integration issues before merging.

What GPT-5.2 Is Designed to Do?

GPT-5.2 is optimized for depth and precision in reasoning-heavy tasks. It shines in algorithmic problem solving, complex refactoring, and debugging intricate code flows.

While its throughput is slightly lower than Gemini 3 Flash, it maintains high logical accuracy, making it suitable for critical code review, architectural suggestions, and long-form documentation generation.

Now that we understand the core design and strengths of Gemini 3 Flash and GPT-5.2, let’s explore how they perform in real-time coding speed and latency.

Also Read: Building Engineering Efficiency: 9 Proven Strategies for High-Performing Teams

Speed and Latency: Which Model Feels Faster in Practice

When your team is shipping code daily, speed directly affects developer efficiency. Gemini 3 Flash and GPT-5.2 differ significantly in how they handle real-time workflows and feedback loops.

Response Time in Real Development Workflows

Gemini 3 Flash typically fits workflows where teams want fast back-and-forth: quick PR suggestions, iterative code edits, and rapid interpretation of logs or configs.

GPT-5.2 typically fits workflows where teams accept slower responses in exchange for more deliberate, reasoning-heavy outputs, like complex debugging, architecture review support, or careful refactoring plans.

Impact on Developer Velocity and Feedback Loops

Gemini 3 Flash: Boosts PR resolution, detects conflicts in real time, and reduces context switching between coding and review tools, boosting overall engineering throughput for fast-moving teams.

GPT-5.2: With GPT-5.2, you get detailed, highly accurate feedback that catches subtle issues, but slower response times can extend review cycles. It’s ideal when your priority is accuracy and code quality over sheer speed.

Having seen how fast each model responds in practical workflows, the next step is examining how they think, reason, and solve complex engineering problems.

Reasoning and Intelligence: How Smart Are They Really

When it comes to solving complex engineering problems, how a model thinks can determine your code quality, review speed, and debugging efficiency. Gemini 3 Flash and GPT-5.2 take different approaches to reasoning, impacting both everyday coding tasks and multi-step problem solving.

Logical Reasoning and Problem Solving

Gemini 3 Flash: Excels at real-time logical evaluation, spotting inconsistencies, detecting anti-patterns, and suggesting fixes across multiple modules simultaneously. Its reasoning is tuned to practical engineering scenarios, like dependency conflicts, merge errors, and optimization opportunities, giving developers actionable insights instantly.

GPT-5.2: You’ll get careful, step-by-step reasoning that’s ideal for algorithmic challenges, refactoring, and critical bug analysis. While it may take a bit longer to process, it surfaces deep logic errors and architectural issues you might miss in everyday workflows.

Handling Complex, Multi-Step Tasks

Gemini 3 Flash: Manages multi-stage code flows, from function implementation to integration, maintaining context across modules and delivering real-time suggestions for each step.

GPT-5.2: Handles intricate, multi-step tasks by breaking them down logically, giving you clear guidance on each step. This is perfect when tackling critical refactors or end-to-end algorithm design, where precision matters more than speed.

Once we grasp their reasoning abilities, it’s time to evaluate how Gemini 3 Flash and GPT-5.2 translate that intelligence into code generation, refactoring, and debugging.

Also Read: 15 Leadership Strategies for Scaling Engineering Teams That Actually Work

Coding and Developer Performance

How a model writes, refactors, and debugs code directly affects developer efficiency and software quality. Gemini 3 Flash and GPT-5.2 differ in output speed, accuracy, and integration with complex codebases.

Feature | Gemini 3 Flash | GPT-5.2 |

Code Generation | Fast, context-aware generation for multi-file projects. Suggestions align with repository standards and dependencies automatically. | Deep, logic-driven generation suitable for complex algorithms and intricate system designs. Slightly slower but ensures precise output. |

Refactoring Quality | Inline refactoring recommendations with real-time detection of anti-patterns and optimization opportunities. | Step-by-step guidance for critical refactors, highlighting architectural improvements and logic corrections. |

Debugging & Testing | Surfaces runtime issues, integration errors, and missing tests instantly, helping teams catch and reduce bugs before code merges. | Detects subtle logic errors, edge cases, and test coverage gaps. Supports creating detailed test cases for high-assurance codebases. |

Understanding output quality is important, but how these models maintain context across large codebases and lengthy sessions can make or break workflow efficiency.

Context Window and Long-Form Understanding

When engineering teams work with large repositories or lengthy coding sessions, how an AI model remembers context and maintains coherence can affect your productivity. Gemini 3 Flash and GPT-5.2 handle long-form workflows differently.

Gemini 3 Flash

Gemini 3 Flash maintains context across multi-module repositories and long PR chains, remembering dependencies, variable states, and prior comments in real time. It keeps coherence in extended coding sessions, reducing repeated explanations and enabling seamless multi-file edits.

GPT-5.2

GPT-5.2 handles long-form code and documentation well, but its session memory can truncate over very large repositories, requiring occasional context refreshes. It excels at stepwise reasoning across files, though maintaining multi-session coherence is slower compared to Gemini 3 Flash.

Beyond text, modern development requires interpreting diagrams, logs, and structured data. Let’s see how Gemini 3 Flash and GPT-5.2 handle multimodal inputs.

Multimodal Capabilities: Beyond Text

Interpreting diagrams, architecture visuals, logs, and structured data directly impacts your efficiency and code quality in engineering. Gemini 3 Flash and GPT-5.2 handle these non-text inputs in different ways.

Gemini 3 Flash

Gemini 3 Flash can interpret diagrams, UMLs, architecture schematics, and flowcharts directly in your workflow, while also parsing logs, JSON outputs, and other structured data. You get real-time, actionable insights without switching tools, keeping your engineering flow uninterrupted.

GPT-5.2

With GPT-5.2, you can analyze diagrams and visual inputs, but you’ll often need to structure data or provide extra context for accurate interpretation. Handling logs and non-text inputs works, yet maintaining precision across complex formats can require careful prompting on your side.

Having explored practical capabilities, we now quantify their performance through benchmarks. This way, you can understand measurable strengths and limitations for real engineering tasks.

Benchmarks and Measured Performance

Benchmarks provide quantifiable insight into how each model performs across coding, reasoning, and software-related tasks. The trick is using the right benchmarks for your workflow (agentic coding vs PR review vs log triage vs long-context refactors), and not over-trusting any single score.

Headline Benchmark Table (Gemini 3 Flash vs GPT-5.2):

What You Care About | Benchmark | Gemini 3 Flash (Thinking) | GPT-5.2 (High Reasoning) | How To Interpret This For Engineering |

|---|---|---|---|---|

Real repo bug-fixing (agentic coding) | SWE-bench Verified (single attempt) | 78.0% | 80.0% | Both are extremely strong for “take an issue + repo → produce a patch.” GPT edges slightly. |

Terminal-style debugging & CLI workflows | Terminal-Bench 2.0 | 47.6% | — | Gemini has a published score here; GPT-5.2 wasn’t listed in the same table. |

Long-horizon “do a bunch of tool steps” tasks | Toolathlon | 49.4% | 46.3% | Gemini leads; useful signal for multi-step agent workflows (tool calls, structured subtasks). |

Multi-step workflows via MCP | MCP Atlas | 57.4% | 60.6% | GPT leads; relevant if your workflow is MCP-heavy (connectors, multi-tool orchestration). |

Competitive programming / algorithmic coding | LiveCodeBench Pro (Elo) | 2316 | 2393 | GPT leads; better signal for algorithmic reasoning + tricky coding under constraints. |

Long-context “needle in haystack” reliability | MRCR v2 (128k avg, 8-needle) | 67.2% | 81.9% | GPT has a big edge; matters for very large repos, long incident docs, or multi-file refactors. |

UI/screen understanding | ScreenSpot-Pro | 69.1% | 86.3% (with Python) | GPT’s score shown here uses a tool; still a strong advantage for UI-driven debugging and “read the screen” tasks. |

Log/charts/figures reasoning | CharXiv Reasoning (no tools) | 80.3% | 82.1% | Very close; both solid for technical charts, traces, and figure-style reasoning. |

“Hard novelty” reasoning | ARC-AGI-2 | 33.6% | 52.9% | GPT leads; this is more abstract than day-to-day engineering, but correlates with tricky reasoning. |

Science-grade QA | GPQA Diamond (no tools) | 90.4% | 92.4% | Both are frontier-level; GPT slightly higher. |

Pricing (API list price) | Input / Output per 1M tokens | $0.50 / $3.00 | $1.75 / $14.00 | Gemini is dramatically cheaper on list price; this matters if you’re doing high-volume CI, support, or agent loops. |

Sources for the benchmark numbers and the per-token prices in this table come from Google DeepMind’s published Gemini 3 Flash evaluation table (which includes GPT-5.2 comparison columns).

GPT-5.2’s SWE-Bench Verified (80.0%) and SWE-Bench Pro (55.6%) are also reported by OpenAI.

Key Benchmark Results Explained

Gemini 3 Flash’s strongest “engineering” signals

Agentic workflow strength: Toolathlon is higher (49.4% vs 46.3%), indicating stronger long-horizon execution when an agent must plan and repeatedly call tools.

Cost for scale: $0.50/$3.00 per 1M input/output tokens makes it easier to run lots of evals, CI helpers, or always-on assistants without flinching at the bill.

SWE-bench Verified is already elite: 78% is “top-tier coding agent” territory.

GPT-5.2’s strongest “engineering” signals

Long-context reliability advantage: MRCR v2 shows a large lead (81.9% vs 67.2%), which matters when you stuff huge repos, long postmortems, or multi-incident context into a single run.

Algorithmic/competition coding edge: LiveCodeBench Pro Elo is higher (2393 vs 2316), matching the “structured algorithm + correctness” strength you’d feel in thorny logic and refactor correctness.

Top SWE-bench Verified score: 80% vs 78% is a small but real edge on the most common public repo-fix benchmark.

SWE-Bench Pro (multi-language, more industrial): OpenAI reports 55.6% on SWE-Bench Pro, which is useful if your stack isn’t Python-centric. (Google doesn’t publish a Gemini 3 Flash SWE-Bench Pro number in the sources above.)

What Benchmarks Do And Don’t Tell You

Benchmarks are useful, but they don’t match your reality: your repo structure, your build system, your code review culture, your incident patterns, and your internal tooling.

Use benchmarks as a filter, not a verdict. The final call should come from a short internal eval on your workflows:

PR review quality: Does it catch real bugs, suggest better abstractions, and avoid nitpicks?

Debugging accuracy: Can it resolve a few real past incidents using your logs/runbooks without inventing facts?

Refactor correctness: Can it change real modules safely (tests pass, interfaces consistent, no silent behavior change)?

Tool discipline: When it’s unsure, does it ask for the missing file/log, or does it guess?

Cost and Scalability of these Platforms

When running AI models for large engineering workflows, understanding per-token costs, output efficiency, and scalability is key to predicting your operational budget. This also helps in avoiding unexpected overspend. Let's learn the cost through the following table:

Pricing Metric | Gemini 3 Flash | GPT‑5.2 |

Input Tokens (per 1M) | $0.50 | $1.75 |

Output Tokens (per 1M) | $3.00 | $14.00 |

Context Caching | $0.05 / Cached | $0.175 / Cached |

Batch / Async Tiers | ~50% lower with batch options | Cached input discounts available |

For budgeting, estimate monthly tokens across your top workflows (IDE assist, PR reviews, incident RCA, docs). Then model the cost using:

Monthly input tokens × input rate

Monthly output tokens × output rate

Note that pricing may change; please verify against the vendor pages.

Tooling, Integrations, And Workflow Fit

In practice, workflow fit is determined less by Gemini 3 Flash vs GPT-5.2 as “models” and more by how you deploy either one: IDE extensions, PR bots, CI checks, incident workflows, and how well the setup connects to your repos and ticketing.

So instead of assuming “Gemini 3 Flash plugs in” or “GPT-5.2 needs manual setup,” evaluate the integration path you’ll actually use for whichever model you pick.

The integration layers that matter for both Gemini 3 Flash and GPT-5.2:

IDE layer: in-editor assistance (code suggestions, refactors, explanations)

PR layer: review summaries, risk flags, lint/test guidance, policy checks

CI/CD layer: build/test gates, deploy verification, rollback triggers

Incident layer: log/trace correlation, runbooks, postmortem capture

Work management layer: Jira/Linear linking, ownership routing, Slack notifications

If You Want Fast Iteration (Low Context Switching)

For teams using Gemini 3 Flash or GPT-5.2 primarily for high-frequency work (PR flow + IDE help), prioritise a setup that reduces context switching:

IDE + PR bot combo so developers don’t jump between tools

CI posts actionable summaries back into the PR (not raw logs)

A standard “incident packet” template (recent deploys + key logs + top metrics) so prompts stay short

This is the best path if your goal is shorter feedback loops and faster PR cycles, regardless of the model you choose.

If You Want High-Assurance Reasoning (Max Context Preservation)

For teams using Gemini 3 Flash or GPT-5.2 for deeper debugging, architecture reviews, and critical refactors, prioritise workflows that preserve full context and remain repeatable:

Provide repo context systematically (relevant files, configs, dependencies)

Use structured prompts (same sections every time: constraints, assumptions, risks, test plan)

Create repeatable evaluation prompts so you can compare outputs over time

Keep humans in the loop for high-impact decisions (merge approvals, prod rollbacks)

This is the best path when your priority is accuracy, traceability, and consistency.

Bottom line: comparing Gemini 3 Flash vs GPT-5.2 on “workflow fit” should focus on the deployment setup (IDE + PR + CI + incident flow) more than the raw model label.

Now that workflow integration is clear, enterprise considerations like security, stability, and operational maturity dictate how these models perform at scale.

Also Read: 10 Proven Best Practices for Managing Technical Debt in Distributed Teams

Enterprise Readiness and Production Use

Choosing an AI model for your engineering org needs to have security, stability, and operational reliability when integrating into production systems at scale. Here’s how both model fares:

Gemini 3 Flash

Gemini 3 Flash comes with enterprise-grade security controls, including role-based access, encrypted data handling, and audit logs for code and documentation. Its architecture supports high-availability deployment, automated failover, and predictable scaling, ensuring uninterrupted code reviews, CI/CD checks, and multi-team collaboration.

GPT‑5.2

GPT‑5.2 offers strong encryption and compliance features, but enterprise deployment often requires additional configuration for secure API usage and integration with internal systems. While reliable, scaling across large orgs or sensitive codebases may demand extra infrastructure and monitoring, especially for mission-critical workflows.

Once we’ve assessed security, scalability, and workflow fit, we can compare scenarios to decide which AI model aligns best with your team’s priorities.

Which Model Is Right for Your Team?

Picking the right AI model means matching features, speed, and workflow fit to your engineering team’s specific needs, scale, and goals.

When Gemini 3 Flash Makes More Sense

Your team works with large codebases and needs fast, context-aware code reviews.

You require seamless IDE and CI/CD integration with minimal setup.

Security and enterprise-grade governance are essential.

Cost predictability matters for high-volume token processing, especially in code analysis, debugging, or automated documentation.

When GPT‑5.2 Is the Better Choice

Your focus is on complex algorithmic reasoning or structured problem-solving beyond everyday code generation.

You can invest time in custom integrations and agent workflows.

Occasional higher costs are acceptable for advanced output quality or specialized tasks.

Teams value flexibility in prompt-driven outputs over turnkey IDE integration.

Having matched models to team needs, the challenge remains: how to unify outputs into actionable insights. That’s where Entelligence AI simplifies engineering intelligence across workflows.

Where Entelligence AI Fits in the Stack?

When comparing Gemini 3 Flash and GPT-5.2, the challenge is turning AI outputs into actionable code, documentation, and team insights. Engineers often juggle multiple tools, slowing reviews, debugging, and sprint tracking.

Entelligence AI unifies this process by layering AI intelligence into your engineering workflow, giving you clarity, speed, and operational control across the org without switching between tools.

Key Features of Entelligence AI:

Contextual Code Reviews: Highlights critical issues, test gaps, and anti-patterns directly in your IDE, improving the actionable value of both Gemini 3 Flash and GPT-5.2 outputs.

Automated Documentation: Generates real-time, multi-repo architecture diagrams, API docs, and code explanations that stay in sync with ongoing changes.

Security Dashboard: Continuously scans for vulnerabilities, misconfigurations, and dependency risks, keeping AI-generated code production-safe.

Sprint & Team Insights: Tracks task completion, blockers, and developer contributions, turning model outputs into measurable team performance data.

Background AI Agent: Automates repetitive tasks like linting, refactoring, and PR prep, freeing developers to focus on high-value coding work.

By integrating Entelligence AI with your chosen AI model, you get both cutting-edge AI reasoning and real-world workflow efficiency. This makes Gemini 3 Flash or GPT-5.2 outputs actionable and production-ready.

Final Verdict: Choosing the Right Intelligence Layer

In 2026, both Gemini 3 Flash and GPT‑5.2 offer impressive reasoning, coding, and multimodal capabilities. Gemini 3 Flash excels in context retention, multi-step reasoning, and workflow integration, while GPT‑5.2 shines in structured problem-solving and algorithmic complexity. Your choice ultimately depends on team scale, project type, and operational priorities.

Entelligence AI complements either model by turning AI outputs into actionable engineering intelligence. It unifies code quality, automated documentation, security, and team performance insights. This way, you can move faster, reduce errors, and maintain full visibility across projects without juggling multiple tools.

Ready to see how Entelligence AI can improve your AI workflows and unify Gemini 3 Flash or GPT‑5.2 outputs into actionable engineering insights? Book a demo today to experience the full platform in action.

FAQs

1. Which one is better, Gemini or GPT?

There’s no universal winner; it depends on what your team prioritizes. Gemini 3 Flash is better suited for engineering teams that need speed, large-context handling, and tight integration with codebases and workflows. GPT-5.2 performs better when tasks demand deep reasoning, structured logic, or complex problem decomposition. The “better” choice depends on whether your priority is execution velocity or analytical depth.

2. Which model is better for large engineering teams?

For large teams working across multiple repositories and CI/CD pipelines, Gemini 3 Flash tends to scale more smoothly. Its ability to maintain context across large codebases and support real-time collaboration makes it more practical for high-volume engineering environments.

3. Which model is more cost-efficient for long-term use?

Gemini 3 Flash generally offers more predictable and lower operational costs at scale, especially for teams processing large volumes of code, logs, or documentation. GPT-5.2 can become more expensive as usage grows due to higher per-token costs and deeper reasoning overhead.

4. Which model works better for complex system design and architecture reviews?

GPT-5.2 is often better suited for deep architectural reasoning, system design discussions, and complex algorithmic evaluation. It excels when the task requires structured thinking and detailed explanation rather than rapid iteration.

5. Can both models be used together?

Yes. Many teams use Gemini 3 Flash for fast, continuous engineering workflows and GPT-5.2 for specialized reasoning or design validation. This hybrid approach balances speed, cost efficiency, and depth of analysis.

We raised $5M to run your Engineering team on Autopilot

We raised $5M to run your Engineering team on Autopilot

Watch our launch video

Talk to Sales

Turn engineering signals into leadership decisions

Connect with our team to see how Entelliegnce helps engineering leaders with full visibility into sprint performance, Team insights & Product Delivery

Talk to Sales

Turn engineering signals into leadership decisions

Connect with our team to see how Entelliegnce helps engineering leaders with full visibility into sprint performance, Team insights & Product Delivery

Try Entelligence now